But what makes up a game engine? There are many different game engines, each aimed at different types of games and each developed with different goals in mind. But most engines include these components:

First off, you have the device drivers component, which is essentially a "layer" of code that allows the engine to interact smoothly with the hardware (example: graphics card) of the device (example: PS3) on which it is installed.

Then you have the operating system component of the engine, which is mainly related to the operating system (example: Windows) of the device you are running the engine on. Its main purpose is to make sure that your game "behaves" with the operating system and the other programs associated with it. In other words, it makes sure that your game doesn't eat up all the resources that should be shared between programs on a computer, mainly memory and processing power.

There is also the Software Development Kits (SDKs) component of the engine. Most engines rely on a number of SDKs to provide a good deal of the functionality and tools of the engine, such as data structures, graphics, and AI. In other words, a game engine may make use of another engine specifically geared for a certain purpose, like a physics engine, to provide some of the necessary tools for the developers.

Then there is the platform independence layer component of the engine, which essentially takes all the information coming from the above components, which may change between platforms (PS3, Xbox 360, PC, etc.), and makes sure that it is compatible with the other parts of the engine.
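To make that a bit more concrete, here is a minimal Python sketch of the idea. Everything in it is an assumption made up for illustration: the class names (`Platform`, `WindowsPlatform`, `ConsolePlatform`), the `save_game` function, and the save locations are hypothetical, and a real engine wraps far more than a file path.

```python
from abc import ABC, abstractmethod


class Platform(ABC):
    """The one interface the rest of the engine is allowed to talk to."""

    @abstractmethod
    def save_path(self, filename: str) -> str:
        """Return where a save file lives on this platform."""


class WindowsPlatform(Platform):
    def save_path(self, filename: str) -> str:
        # Hypothetical location; a real engine would ask the OS for it.
        return "C:\\Saves\\" + filename


class ConsolePlatform(Platform):
    def save_path(self, filename: str) -> str:
        # Hypothetical location standing in for a console's storage.
        return "/savedata/" + filename


def save_game(platform: Platform, data: str) -> str:
    # Engine code above the layer never checks which platform it is on.
    path = platform.save_path("slot1.sav")
    return f"would write {len(data)} characters to {path}"
```

The point of the design is that `save_game` works unchanged whichever `Platform` it is handed, so only the small platform classes need rewriting when the game moves to new hardware.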

The core systems component of the engine more or less handles the "administrative" functions of the engine, mainly providing mathematical libraries, memory management, custom data structures, and assertions (essentially an error-checker).
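As a rough illustration of two of those jobs, here is a small Python sketch of a math helper guarded by an assertion. The `Vec2` class is a hypothetical stand-in for what a real math library would provide, not something taken from any particular engine.

```python
import math


class Vec2:
    """A tiny 2D vector, the kind of thing a core math library provides."""

    def __init__(self, x: float, y: float) -> None:
        self.x = x
        self.y = y

    def length(self) -> float:
        return math.hypot(self.x, self.y)

    def normalized(self) -> "Vec2":
        length = self.length()
        # The assertion is the "error-checker": it stops the program right
        # here if a caller passes in a vector that has no direction.
        assert length > 0.0, "cannot normalize a zero-length vector"
        return Vec2(self.x / length, self.y / length)
```

Calling `Vec2(3.0, 4.0).normalized()` gives a vector of length 1 pointing the same way, while `Vec2(0.0, 0.0).normalized()` trips the assertion instead of quietly producing garbage.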

The Resource Manager component makes sure that the rest of the engine or the game is capable of accessing all the different resources needed for the game, regardless of their file type.
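One way to picture this is a manager that picks a loader based on the file extension and remembers what it has already loaded. The sketch below is only that, a sketch: the `ResourceManager` class, its method names, and the fake loaders (which just return a label instead of reading a file) are all hypothetical.

```python
import os


class ResourceManager:
    """Loads each file at most once and hands out the cached result."""

    def __init__(self) -> None:
        self._loaders = {}  # file extension -> function that loads it
        self._cache = {}    # path -> already-loaded resource

    def register_loader(self, extension, loader) -> None:
        self._loaders[extension.lower()] = loader

    def get(self, path):
        if path not in self._cache:
            extension = os.path.splitext(path)[1].lower()
            if extension not in self._loaders:
                raise ValueError(f"no loader registered for {extension!r}")
            self._cache[path] = self._loaders[extension](path)
        return self._cache[path]


# Stand-in loaders: real ones would read and decode the files.
manager = ResourceManager()
manager.register_loader(".png", lambda path: ("texture", path))
manager.register_loader(".wav", lambda path: ("sound", path))
```

The rest of the game just calls `manager.get("hero.png")` or `manager.get("jump.wav")` and never has to care which loader did the work.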

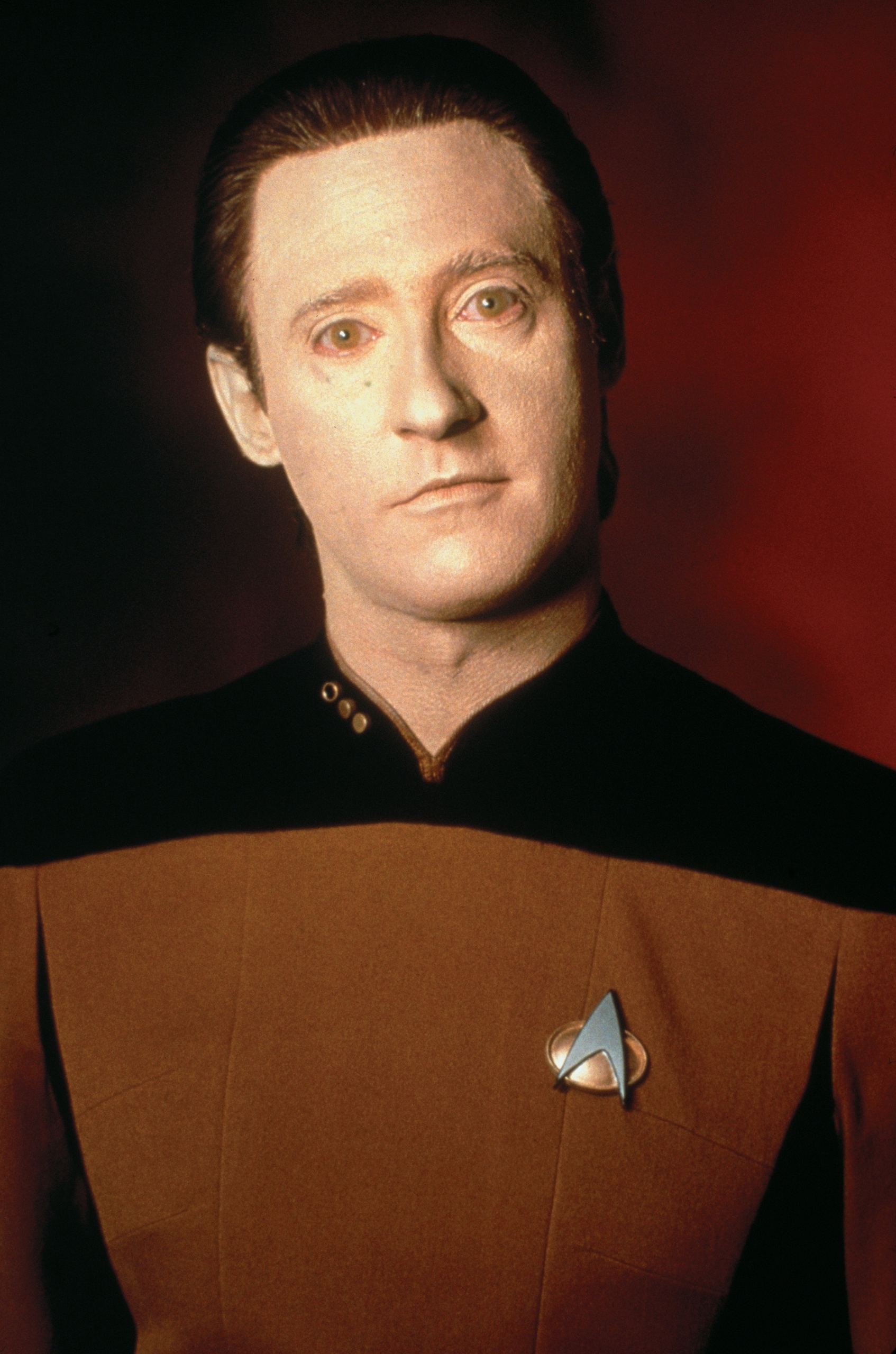

Now here is an interesting bit: the rendering engine. Yes, I know we are already talking about a game engine. But you know what? Game engines are engines with engines, which might also have engines. Engine-ception! But back on topic: this part of the engine deals with just about everything to do with making things appear on screen, from the menus, to the 3D models, to the particle systems (everyone loves particles!).

[Image: You with particles.]

[Image: You without particles.]

[Image: Found one!]

Making these components takes time and resources, both of which could be better used for making awesome games. Awesome games not only make you and the dev team happy, they also keep the team making money, which keeps the company afloat, which makes the dev team really happy.

The animation component, of course, deals with how things move in the game. In other words, how a character walks is handled by the animation component of the engine.
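A very simplified way to think about it: the animator stores a value at a few points in time (keyframes) and the engine fills in everything in between. The Python sketch below assumes plain linear blending between keyframes and uses hypothetical `lerp` and `sample` helpers; real animation systems are a lot more involved.

```python
def lerp(start: float, end: float, t: float) -> float:
    """Blend from start to end as t goes from 0 to 1."""
    return start + (end - start) * t


def sample(keyframes, time: float) -> float:
    """Return the animated value at a given time.

    keyframes is a list of (time, value) pairs with strictly increasing times.
    """
    if time <= keyframes[0][0]:
        return keyframes[0][1]
    if time >= keyframes[-1][0]:
        return keyframes[-1][1]
    # Find the two keyframes that surround the requested time.
    for (t0, v0), (t1, v1) in zip(keyframes, keyframes[1:]):
        if t0 <= time <= t1:
            return lerp(v0, v1, (time - t0) / (t1 - t0))


# A made-up foot height over one step: down, up, down again.
foot_height = [(0.0, 0.0), (0.5, 10.0), (1.0, 0.0)]
```

Here `sample(foot_height, 0.25)` lands halfway between the first two keyframes, so the foot is halfway up.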

The Human Interface Devices (HIDs) component mainly deals with the devices we, as humans, use to create input for the game. Essentially, this is the part of the engine that deals with controllers. Whether they be mouse and keyboard, gamepad, or some other device, the HID component handles it.
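A common way to handle this (sketched here in Python, with made-up device and button names) is to translate raw device input into game actions, so the rest of the game only ever hears "jump" and never "space bar" or "A button". The `BINDINGS` table and `translate` function are hypothetical.

```python
# Hypothetical bindings: (device, raw input) -> game action.
BINDINGS = {
    ("keyboard", "space"): "jump",
    ("gamepad", "button_a"): "jump",
    ("keyboard", "w"): "move_forward",
    ("gamepad", "stick_up"): "move_forward",
}


def translate(device: str, raw_input: str):
    """Turn a raw device event into a game action, or None if unbound."""
    return BINDINGS.get((device, raw_input))
```

Both `translate("keyboard", "space")` and `translate("gamepad", "button_a")` come back as `"jump"`, which is exactly why the gameplay code doesn't need to know what the player is holding.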

The next part is the audio section which, uh, deals with audio. Essentially, it makes sure that the sounds and music are handled correctly to provide the best experience possible. Audio is sometimes overlooked, though, which is sad given the integral part that audio plays in a game. Want proof? Go into the options of a video game, turn the music volume down to zero, and see how that affects the gameplay.

The next component of the engine deals with the multiplayer functionality of the engine, meaning that it deals with split screens, the multiple inputs from the different players, and, in regard to online play, the networking of the separate consoles. This component is called... well... the multiplayer and networking component. Look! These names are very efficient, OK?

Next we have the Gameplay Foundation Systems. Whereas the other components deal with how things act in certain ways, this component allows those things to act in that way. In other words, this is the rule keeper of the engine: it dictates which things move or don't move and what those things are capable of doing. So if your character can shoot fireballs, it's because the Gameplay Foundation Systems have deemed it so. This component is sometimes written in a scripting language, which allows developers to make changes to the game without having to recompile the game, which saves precious time.
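Here is a small Python sketch of the "rule keeper" idea, assuming the rules are kept as data that gets read while the game runs. The entity names, the JSON layout, and the `load_rules` and `can_use` helpers are all invented for this example.

```python
import json

# In a real project this text would live in its own file that designers edit.
RULES_TEXT = """
{
    "wizard": {"can_move": true, "abilities": ["fireball"]},
    "statue": {"can_move": false, "abilities": []}
}
"""


def load_rules(text: str) -> dict:
    """Parse the rules; nothing about the entities is hard-coded here."""
    return json.loads(text)


def can_use(rules: dict, entity: str, ability: str) -> bool:
    """Ask the rule keeper whether an entity is allowed to do something."""
    return ability in rules[entity]["abilities"]


rules = load_rules(RULES_TEXT)
```

Giving the statue a fireball means editing one line of the rules text and reloading it. None of the code above has to change, which is the same kind of time saving the scripting approach is after.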

Lastly, there is the Game-Specific Subsystems component, which is more or less the creation of new parts for the engine that will produce the specific effects the developers need for the game.

Finally! Now, of course, what I went over in this blog is just a simple overview. There is so much more to these components, and I encourage you to research further into them should you desire to do so.

sources

Gregory, Jason. Game Engine Architecture. Boca Raton: CRC Press, 2009.

http://evansheline.com/wp-content/uploads/2012/04/happy-dog.jpg

http://cutepics.org/wp-content/uploads/2011/10/sad-puppy.jpg

http://wpmedia.blogs.calgaryherald.com/2013/01/starship-troopers.jpg

http://i2.kym-cdn.com/photos/images/original/000/185/168/misc-jackie-chan-l.png

http://www.geek.com/wp-content/uploads/2013/11/OculusRift1.jpg

http://thegamerspad.net/wp-content/uploads/2013/10/wallpaper__glorious_pc_gaming_master_race_by_admiralserenity-d5qvxos.png
